Top Mistakes in AI Music Generation

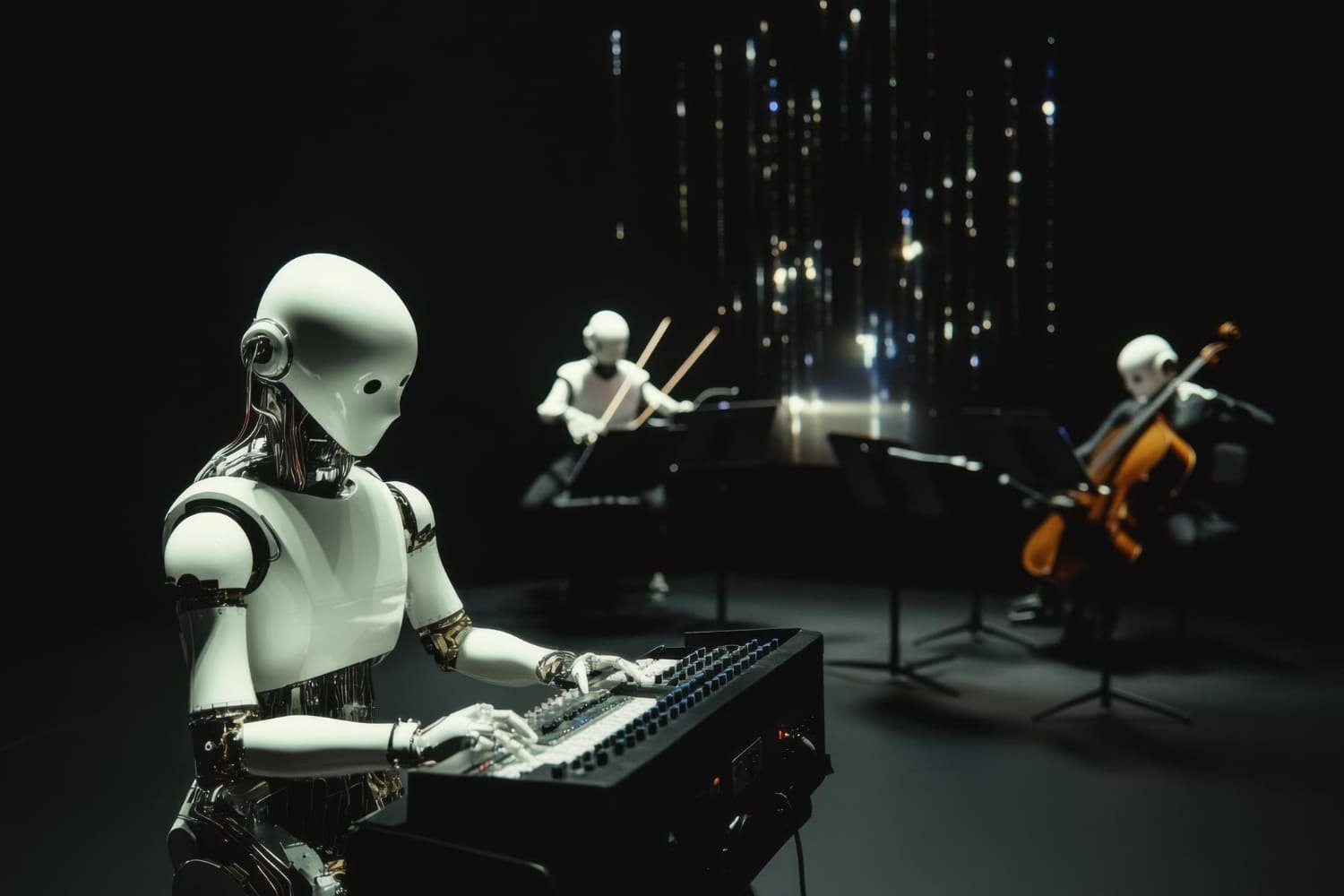

Artificial intelligence (AI) is transforming how music is made, opening new creative possibilities for composers, producers, and enthusiasts alike. Yet once the initial excitement wears off, many regular users run into the same problems: outputs that sound flat, generic, or unpredictable. Most of these problems trace back to a handful of avoidable mistakes.

Mistake #1: The “Good Music” Trap

A common mistake when working with AI music generators is using overly broad or vague prompts. Unlike human collaborators who can intuitively grasp intent, AI models are literal interpreters. General instructions like “good music” or “happy song” fail to yield meaningful results, as AI lacks the intuition to transform abstract emotions into concrete musical elements. This often leads to random, incoherent, or emotionally flat results that don’t match the user’s vision.

Music, by its very nature, is complex: it is sequential, multilayered, and requires long-term coherence, which gives it a “low tolerance for error.” Even slight ambiguity in a prompt can result in repetitive or inconsistent output, because AI struggles to reproduce the intricate temporal structures a cohesive song requires. Many models also do not “hear” music the way a musician does: they analyze, categorize, and predict musical features from intermediate representations such as spectrograms (visual time-frequency images of sound) or compressed audio tokens. This makes them fundamentally dependent on precision. Vague instructions produce random or shallow results because the AI cannot infer intent; it needs clear, structured guidance expressed in concrete musical terms such as genre, tempo, key, and instrumentation.
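The difference between a vague and a specific prompt can be made concrete with a small template. The sketch below is a hypothetical Python helper (the `MusicPrompt` class and its fields are illustrative, not any platform's API) that forces each prompt to name a genre, mood, tempo, key, instrumentation, and vocal style before it is sent to a generator. Whether a particular tool weighs each of these attributes is an assumption to verify by experiment.

```python
from dataclasses import dataclass, field


@dataclass
class MusicPrompt:
    """Hypothetical helper: turns a vague intent into explicit musical parameters."""
    genre: str
    mood: str
    tempo_bpm: int
    key: str
    instruments: list[str] = field(default_factory=list)
    vocals: str = "instrumental"

    def __post_init__(self) -> None:
        # Catch obviously invalid tempos before they reach a generator.
        if not 20 <= self.tempo_bpm <= 300:
            raise ValueError(f"tempo_bpm out of range: {self.tempo_bpm}")

    def render(self) -> str:
        # Join the concrete attributes into one comma-separated text prompt,
        # skipping any part that is empty.
        parts = [
            self.genre,
            self.mood,
            f"{self.tempo_bpm} BPM",
            self.key,
            ", ".join(self.instruments) if self.instruments else None,
            self.vocals,
        ]
        return ", ".join(p for p in parts if p)


# Instead of "happy song", spell out the musical elements:
specific = MusicPrompt(
    genre="indie pop",
    mood="bright and upbeat",
    tempo_bpm=118,
    key="D major",
    instruments=["jangly electric guitar", "handclaps", "warm bass"],
    vocals="female lead vocals",
)
print(specific.render())
```

Each field is a decision the model would otherwise make at random; leaving one out is a deliberate choice rather than an accident.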

Mistake #2: The “One-Time Miracle” Fallacy

Unrealistic expectations are another common pitfall in AI-driven music generation. Creating music with AI is rarely a one-click process. Initial outputs, though often impressive, are typically raw and need refinement to match a specific vision. AI models also exhibit “response variability”: the same prompt can yield noticeably different results on each run, and small prompt adjustments can change the outcome significantly. Given this inherent trait, expecting perfection on the first attempt is unrealistic.

AI generation should be seen as a dynamic conversation rather than a static monologue. The natural variability in AI’s responses means that initial results are starting points only, requiring an ongoing cycle of feedback and iteration to achieve the desired outcome.

How to Avoid These Pitfalls: Embrace the Iterative Process

To achieve desired results, it’s essential to adopt an iterative approach.

Start broad, then refine: Begin with more open-ended prompts to understand how the AI interprets your general intent. Based on the quality and direction of those initial outputs, gradually refine your prompts by adding more specific details and constraints.

Use feedback loops: Continuously adjust your prompts based on what the AI produces. Treat each generation as feedback. If the AI misses the mark, rephrase your prompt or build on the previous one. Each iteration should bring you closer to clarity and alignment with your creative vision.

Systematically test prompt variations: Experiment with different tones, structures, and levels of detail in your prompts (e.g., a tag-style list such as “lo-fi hip hop, 80 BPM, mellow Rhodes piano, vinyl crackle” vs. a descriptive sentence such as “a relaxed late-night beat that feels like rain on a window”). Track what works and what doesn’t to build a library of successful prompt patterns for future projects.
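A library of successful prompt patterns can be as simple as a log of each prompt and how you rated its output. Below is a minimal Python sketch, assuming you score each generation yourself on a 1-5 scale; the `PromptLibrary` class is hypothetical (not part of any AI music tool), and the ratings are made-up examples.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Attempt:
    prompt: str
    rating: int  # your own 1-5 score after listening to the result
    notes: str = ""


class PromptLibrary:
    """Hypothetical log of prompt variations and how well each one worked."""

    def __init__(self) -> None:
        self.attempts: list[Attempt] = []

    def record(self, prompt: str, rating: int, notes: str = "") -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.attempts.append(Attempt(prompt, rating, notes))

    def average(self, prompt: str) -> float:
        # Mean rating across every generation made with this exact prompt (0.0 if untried).
        scores = [a.rating for a in self.attempts if a.prompt == prompt]
        return mean(scores) if scores else 0.0

    def best(self, min_tries: int = 1) -> Optional[str]:
        # Only trust prompts tried at least `min_tries` times, since outputs vary run to run.
        counts: dict[str, int] = {}
        for a in self.attempts:
            counts[a.prompt] = counts.get(a.prompt, 0) + 1
        candidates = [p for p, n in counts.items() if n >= min_tries]
        return max(candidates, key=self.average) if candidates else None


tags = "lo-fi hip hop, 80 BPM, mellow Rhodes piano, vinyl crackle"
sentence = "a relaxed late-night beat that feels like rain on a window"

library = PromptLibrary()
library.record(tags, 4, "good groove, piano a bit loud")
library.record(tags, 3)
library.record(sentence, 2, "too vague, drifted into ambient")
print(library.best(min_tries=2))  # the tag-style prompt
```

The `min_tries` threshold reflects response variability: a prompt that scored well once may simply have been lucky.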

For deeper control, consider using advanced prompting techniques:

Step-by-step (chain-of-thought-style) prompting: Break complex musical tasks into logical, sequential steps for the AI. Instead of a single prompt for the entire song, structure it like:

“Step 1: Create an intro with a melancholic piano melody.

Step 2: Develop a verse with a driving drum rhythm and emotional male vocals.

Step 3: Transition to a powerful chorus with lush orchestral strings.”

This helps the AI maintain coherence and structure across longer compositions.

Reflection prompting: Ask the AI to evaluate or improve its own generated music or a specific section. This works best with tools that accept conversational follow-up instructions. For example, after generation, you could ask:

“Reassess this chorus. Does it feel uplifting enough? Suggest improvements to enhance the emotional impact.”

This encourages self-correction and refinement, leading to more nuanced results.

These advanced prompting strategies turn the user-AI interaction from a simple command-response exchange into a more sophisticated “thinking partnership.” By guiding the AI’s “reasoning process,” users gain significantly greater control over musical development and the overall coherence of the output.
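If your tool accepts only one prompt at a time, the step-by-step structure from the first technique above can be generated programmatically. This hypothetical Python sketch (`chain_section_prompts` is an illustrative name, not a platform feature) repeats a shared style description in every step and lists the sections already written, so each request carries the context needed for continuity. How much of that context a given generator actually uses is an assumption to test.

```python
def chain_section_prompts(steps: list[tuple[str, str]], shared_style: str) -> list[str]:
    """Build one prompt per section, each repeating the shared style and the sections already done."""
    prompts: list[str] = []
    completed: list[str] = []
    for number, (section, description) in enumerate(steps, start=1):
        # Carry forward a short record of earlier sections so each request keeps context.
        context = f" Sections so far: {', '.join(completed)}." if completed else ""
        prompts.append(
            f"Step {number}: {section} with {description}. "
            f"Shared style: {shared_style}.{context}"
        )
        completed.append(section)
    return prompts


steps = [
    ("Intro", "a melancholic piano melody"),
    ("Verse", "a driving drum rhythm and emotional male vocals"),
    ("Chorus", "a powerful lift and lush orchestral strings"),
]
for prompt in chain_section_prompts(steps, "cinematic pop ballad, 72 BPM, A minor"):
    print(prompt)
```

Repeating the shared style in every step is the design choice that matters here: it keeps tempo and key from drifting between separately generated sections.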

Mistake #3: The “AI Does It All” Assumption

While AI can generate impressive musical ideas, raw AI-generated music often lacks the subtle nuances, emotional depth, and professional polish found in human-made tracks. Music production is an art form that demands near-perfect precision—even minor flaws can result in an unsatisfying listening experience.

AI-generated music can feel predictable, simplistic, or repetitive, partly because it is assembled from learned patterns rather than shaped by a human perspective. The result can be an “uncanny valley” effect: music that sounds close to human but misses the subtle elements that create a genuine emotional connection. This is why AI is excellent for utilitarian music (e.g., jingles, background tracks) but struggles with authentic expression, which still requires human intervention for artistic resonance and professional quality. AI should be seen as a powerful collaborator and enhancer, not a replacement for human creativity.

How to Avoid It: Treat AI as a Collaborative Tool

To create high-quality AI-assisted music, it’s essential to embrace AI as a collaborative instrument.

Plan for post-production: Raw AI outputs benefit greatly from traditional mixing and mastering. Balance levels, apply dynamics processing such as compression and limiting, use EQ to separate competing frequencies, and fine-tune the transitions between sections. This turns a generated idea into a polished musical piece.

Incorporate human-performed elements: Consider adding live instruments (e.g., a guitar solo, a unique drum fill) or human vocals to your AI-generated tracks. These elements introduce authenticity, expressive nuance, and warmth, helping the music cross the uncanny valley and feel more relatable.

Use Digital Audio Workstations (DAWs): Export individual AI-generated stems (such as drums, bass, melody) and import them into a professional DAW (e.g., Ableton Live, Logic Pro, FL Studio). This allows for precise editing, layering, advanced effects, and song arrangement—capabilities often limited within AI generators themselves.

Refine using built-in editing tools: Many AI platforms are evolving to include professional editing studios, stem-separation technology, and fine-tuning capabilities for custom models. Use these built-in tools to polish your compositions, blend elements smoothly, and even teach the AI to better match your unique style.
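Real mixing and mastering belong in a DAW or dedicated plug-ins, but the arithmetic behind one common gain-staging step, peak normalization, is simple enough to show: the gain is 10^(target_dBFS / 20) divided by the current peak. Below is a minimal Python sketch, assuming a stem has already been decoded to floating-point samples in the range -1.0 to 1.0 (decoding and file I/O are omitted, and `peak_normalize` is an illustrative name).

```python
import math


def peak_normalize(samples: list[float], target_dbfs: float = -1.0) -> list[float]:
    """Scale float samples (nominal range -1.0 to 1.0) so the loudest peak lands at target_dbfs."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence or empty input: nothing to scale
    # Convert the dBFS target to a linear amplitude: 10^(dB / 20).
    target_linear = math.pow(10, target_dbfs / 20)
    gain = target_linear / peak
    return [s * gain for s in samples]


quiet_stem = [0.0, 0.25, -0.5, 0.1]
louder = peak_normalize(quiet_stem, target_dbfs=-6.0)
print(max(abs(s) for s in louder))  # roughly 0.501, i.e. -6 dBFS
```

Peak normalization only sets the highest sample level. Perceived-loudness targets used by streaming services are measured in LUFS (ITU-R BS.1770) and call for loudness-based tools instead.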

Producing high-quality AI music requires a hybrid skill set that seamlessly blends AI generation with traditional audio engineering principles and human creative input. This holistic approach maximizes AI’s efficiency as a creative engine while preserving and enhancing artistic integrity and professional sound quality.

Mistake #4: The “Loop Trap”

A well-documented challenge for AI is maintaining long-term musical structure and avoiding repetitive or inconsistent output across a song’s duration. Unlike static images, music unfolds in time, with complex structures like verses, choruses, bridges, and breakdowns that AI models often struggle to organize and develop coherently.

Music’s sequential and time-dependent nature makes it significantly more difficult for AI to maintain internal consistency and narrative flow compared to generating single-frame visuals. This fundamental limitation often results in tracks that feel aimless, endlessly looping, or lacking clear progression.

How to Avoid It: Provide a Musical Blueprint

To overcome this issue, users need to provide the AI with a clear structural roadmap.

Define structure using meta-tags: Use explicit meta-tags in your prompts or lyric panels to guide the AI through the song’s progression. Tags like [Intro], [Verse], [Chorus], [Bridge], [Climax], and [Outro] serve as a structural framework, instructing the AI how to build distinct parts of the composition. This is key to avoiding aimless repetition and ensuring logical flow.

Generate in sections: Instead of trying to create an entire multi-minute piece in one go, break your composition into smaller, manageable parts (e.g., generate the intro, then the first verse, then the chorus). Create each section separately, focusing on refining its individual elements and ensuring smooth, logical transitions between sections. Once satisfied, stitch the segments together.

This segmented approach gives you greater control and allows you to iteratively improve each part, resulting in a more coherent overall structure.
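Both recommendations can be sketched in a few lines of Python. The first helper writes a lyric-panel skeleton using the bracketed meta-tags mentioned above; the second joins two separately generated sections with a short linear crossfade so the seam is less abrupt. Both helpers are hypothetical: how a given platform interprets tags varies, and the crossfade assumes mono floating-point samples at the same sample rate (real audio would be decoded first).

```python
def tagged_skeleton(sections: list[tuple[str, str]]) -> str:
    """Render a lyric-panel skeleton: one bracketed meta-tag per section, then its lines."""
    blocks = [f"[{tag}]\n{lines}".rstrip() for tag, lines in sections]
    return "\n\n".join(blocks)


def crossfade_join(a: list[float], b: list[float], fade_len: int) -> list[float]:
    """Join two mono sample lists, blending the tail of `a` into the head of `b` linearly."""
    fade_len = max(0, min(fade_len, len(a), len(b)))
    if fade_len == 0:
        return a + b
    start = len(a) - fade_len
    overlap = []
    for i in range(fade_len):
        t = (i + 1) / (fade_len + 1)  # weight for `b` rises from near 0 to near 1
        overlap.append(a[start + i] * (1 - t) + b[i] * t)
    return a[:start] + overlap + b[fade_len:]


print(tagged_skeleton([
    ("Intro", ""),
    ("Verse", "(first verse lyrics)"),
    ("Chorus", "(chorus lyrics)"),
    ("Outro", ""),
]))

intro = [0.5] * 8   # stand-ins for decoded audio of two generated sections
verse = [-0.2] * 8
song = crossfade_join(intro, verse, fade_len=4)
print(len(song))  # 12: the 4 overlapping samples are shared
```

A linear crossfade can cause a slight dip in level when the two sections are unrelated; equal-power curves (sine/cosine gains) are a common alternative, and a DAW handles this for you in practice.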

Mistake #5: The “Copyright Blind Spot” – Ignoring Legal and Ethical Implications

The music industry is known for its aggressive rights enforcement and low tolerance for copyright misuse. AI models trained on large datasets of popular, commercially relevant music almost certainly ingest copyrighted material, creating significant legal risks and the potential for costly lawsuits for both developers and users. Cases against AI tools for training on protected music and mimicking the styles of famous artists highlight how serious this risk is.

The core copyright issue in AI music generation arises not only from the output, but more critically from the training data itself. Since the majority of commercially relevant music is copyrighted, this creates a widespread legal minefield for AI developers—and by extension, for users. This affects both the quality and diversity of the data available to train AI models, and—crucially—the legal usability of the music they generate.

Beyond legal risks, there are ethical concerns. Because AI can generate music faster and at much lower cost, it may displace creators from their livelihoods and dilute the music industry by prioritizing profit over true artistic expression and authenticity.

How to Avoid It: Navigate the Legal Landscape Responsibly

Responsible navigation of the legal landscape is critical.

Avoid prompts referencing specific artists or songs: Refrain from naming copyrighted artists, bands, or song titles in your prompts; describe the genre, instrumentation, and mood you want instead. This is a key step in minimizing the risk of producing infringing material that closely mimics protected works.

Choose legally compliant tools: Prioritize AI platforms that clearly state they use “cleared content” in their training data and that offer full commercial rights to end-users for the generated output. Platforms like Aimi stand out by proactively avoiding the legal minefield from the start through the use of pre-cleared content.

Proactively selecting an AI tool with a transparent and legally sound data acquisition strategy (e.g., using licensed or copyright-free content for training) is a vital measure. It significantly reduces legal risks for users—especially those aiming for commercial use—by shifting the burden from the user’s prompting choices to the platform’s ethical and legal foundation.
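The first recommendation can be partly automated with a pre-flight check on each prompt. Below is a minimal Python sketch, assuming you maintain your own list of artist, band, and song names to avoid (the entries shown are placeholders, and `flag_protected_references` is an illustrative name). It only catches exact, whole-word mentions, so it supplements rather than replaces careful prompt writing and a legally sound platform.

```python
import re


def flag_protected_references(prompt: str, blocklist: list[str]) -> list[str]:
    """Return the blocklisted names that appear in the prompt as whole words (case-insensitive)."""
    hits = []
    for name in blocklist:
        # re.escape keeps punctuation in names (e.g. slashes or dots) from acting as regex syntax.
        pattern = r"\b" + re.escape(name) + r"\b"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            hits.append(name)
    return hits


# Placeholder entries: maintain your own list of artists, bands, and song titles to avoid.
blocklist = ["Example Artist", "Famous Band", "Hit Song Title"]
print(flag_protected_references("Dreamy synthpop in the style of example artist", blocklist))
print(flag_protected_references("Dreamy synthpop, 100 BPM, airy female vocals", blocklist))
```

When a name is flagged, replace it with the concrete qualities you were after (genre, tempo, instrumentation, vocal character).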

Conclusion

Mastering AI music generation is an evolving skill that requires going beyond basic prompts. Success depends on understanding the “language” of AI, embracing iterative refinement, collaborating with AI rather than expecting it to replace human creativity, providing clear structural guidance, and navigating the complex legal and ethical landscape responsibly.

The most impressive and unique outcomes will always come from a thoughtful blend of human creativity, artistic vision, and intelligent technological tools. The future of music is collaborative, and those who learn to effectively combine their creative instincts with the power of AI will be the ones creating truly exceptional work.

Keep experimenting, learning through practice, and pushing the boundaries of what’s possible with AI-powered music creation.
